One of the features I really want to play with in the rover is some kind of location awareness combined with obstacle avoidance.

There are a lot of ways to do this, and how well each one works depends on the situation.

In one of my many boxes of maker junk I had an Xbox 360 Kinect, which, like the hoverboard, was a thing lots of people threw time at hacking several years ago.

The 360 Kinect is old hat now and more modern sensors do more, but I've got the Kinect. More importantly, if I want to do things like cut the cable down or remove the case to slim it down, they can be had for less than the cost of postage on eBay. So I've bought a second one and it's on its way.

The Kinect is a combination of a decent conventional RGB camera, an infrared camera and an infrared projector that throws a mass of points of infrared light at the scene. Onboard processors then turn that into an RGBD (red/green/blue/depth) image by mapping the distortion of the infrared dots.

The problem with old open source software is much like old closed source hardware: it stops working.

Not that it's fundamentally broken, but the shifting sands of time and internet entropy mean that once it's no longer heavily maintained and interested people move on, links break, software versions change and stuff gets deprecated.

You can still install it from binaries. The SBC I actually wanted to try the Kinect with installed them just fine but then it failed to work with no obvious error.

Moving on to a laptop running a different Linux variant, the binaries installed and worked, but without sound. The Kinect firmware is missing from the binaries. The firmware is a proprietary Microsoft blob so that's understandable, but the script to download that firmware is missing too. I don't have an immediate need for the onboard microphones but it irks me.

So I shifted to building from source and the instructions are wrong.

Not hideously wrong, but links to libraries and git repositories were wrong or out of date, and some utilities that used to be installed by default are no longer there. So I muddled through and got it built. If I have a second go at building it, which is inevitable, I'll try to write it up and correct the OpenKinect Wiki.

My being able to correct these problems is one of the strengths of open source software, but I fully understand why a lot of people find it frustrating. When I first got the Kinect out of the box it's been lurking in for several years, I installed the Microsoft drivers and developer kit on Windows 10 to prove it still worked. The Kinect immediately gave results, ten years after release. It 'just worked' in the way everybody moans Windows doesn't.

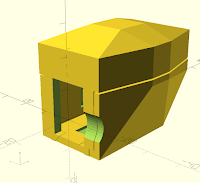

In parallel with this I've been wondering how to mount the Kinect, so as per usual it's 3D printing to the rescue.

I didn't like the look of any of the mounts found on Thingiverse (one of them I tried just didn't fit) so I designed my own. Making very specific module/object mounts for prototyping things seems to be a thing I spend a lot of time doing. The end result is quite nice so I've uploaded it for other people to use.

I've now made a 'deck' on top of the rover that protects and hides the messy motor, driver, battery and power supply wiring. The battery puts out 31-40 V but I need 5 V and 12 V (maybe 3.3 V too) buses to drive the control stuff, and this wiring begins to take up a chunk of space. There are also on/off switches, a battery isolator, charger connections and so on. 'Deck' is a fancy word; it's just another bit of plywood from a skip with stuff screwed to it haphazardly.

First on the list screwed to the deck was the Kinect on its 3D printed mount.

The thing with the Kinect, and frankly any other kind of RGBD sensor, is that they are quite 'heavyweight' sensors in terms of processing power. You get depth information but it's not an easily digestible map; it has to be processed extensively before you can act on it.
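To give a feel for the simplest end of that processing, here is a minimal sketch that boils a depth frame down to one number: the nearest thing straight ahead. It assumes the frame has already been converted to millimetres with 0 meaning "no reading", and the function name, band width and synthetic frame are all made up for illustration rather than taken from any Kinect library.

```python
import numpy as np

def nearest_obstacle_mm(depth_mm, band=0.2, invalid=0):
    """Closest valid depth (mm) in a central vertical band of the frame.

    band is the fraction of the image width to look at (an assumed default).
    Returns None when the band contains no valid pixels.
    """
    depth_mm = np.asarray(depth_mm)
    height, width = depth_mm.shape
    half = max(1, int(width * band / 2))
    centre = width // 2
    strip = depth_mm[:, centre - half:centre + half]
    valid = strip[strip != invalid]  # drop pixels with no depth reading
    if valid.size == 0:
        return None
    return int(valid.min())

# Synthetic 640x480 frame: a wall 3 m away with a box 0.8 m away in the middle
# and a stripe of invalid pixels along the top.
frame = np.full((480, 640), 3000, dtype=np.uint16)
frame[200:280, 300:340] = 800
frame[0:50, :] = 0

print(nearest_obstacle_mm(frame))  # 800
```

Even this throws away almost everything the sensor sees, and a real frame is far noisier than the synthetic one, which is part of why depth data alone is hard to act on.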

This is on top of all the physical limitations the sensor has. It just can't be relied on to prevent collisions, even before you allow for any mistakes in my coding and setup.

Almost any autonomous vehicle you look at has a whole suite of sensors to keep things safe, and to that end I've made an array of three old-school ultrasonic range finders on the bottom of the rover in another 3D printed enclosure. They fit quite nicely between the two motor mounts.

These ultrasonic sensors are decades-old tech but you still find them in beginner robotics projects because they're cheap and highly effective at short range. They too have limitations, but should be an OK backstop for making it harder to drive the rover straight into a wall or, perhaps more importantly, into somebody.
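The arithmetic behind an ultrasonic range finder is pleasantly simple: time the echo, multiply by the speed of sound, and halve it because the ping travels out and back. The sketch below assumes the sensor reports a round-trip echo time in microseconds, as many hobby modules do; the 30 cm stop distance and the function names are placeholders rather than anything measured on the rover.

```python
SPEED_OF_SOUND_M_S = 343.0  # dry air at roughly 20 C; varies with temperature

def echo_to_cm(echo_us):
    """Convert a round-trip echo time in microseconds to a distance in cm."""
    seconds = echo_us * 1e-6
    return seconds * SPEED_OF_SOUND_M_S * 100.0 / 2.0  # halve: out and back

def too_close(readings_cm, stop_cm=30.0):
    """True if any sensor sees something nearer than stop_cm.

    A reading of None stands for 'no echo received' and is ignored.
    """
    return any(r is not None and r < stop_cm for r in readings_cm)

# Example: three sensors, with the middle one seeing something about 17 cm away
readings = [echo_to_cm(5000), echo_to_cm(1000), None]
print([None if r is None else round(r, 1) for r in readings])
print(too_close(readings))
```

On the real hardware this sum would sit on the microcontroller, but the logic is the same in any language.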

Running these ultrasonic sensors is an old-school Arduino Nano, which frankly is perfect for this task. I may buy another three ultrasonic units and stick an identical array at the rear. The rover essentially has no front other than what I've chosen by sticking the Kinect at one end. It can move and turn the same in either direction. Given how cheap Kinects are, I may investigate whether it's possible to connect two to one computer, but I suspect the answer might be no.

Reading around the topic, I've found basic microcontrollers like older Arduinos can be used to feed information into the Robot Operating System (ROS) over USB serial quite easily. So connecting up a bunch of basic sensors, pre-processing their outputs and generating something easy for ROS to digest is where I'll concentrate before getting into any complicated mapping.
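As a rough idea of what "easy to digest" might look like on the computer side, here is a sketch that parses one line of text from the microcontroller into three distances. The line format (`US,left,centre,right` in whole centimetres) is purely hypothetical, as is the function name. Reading the serial port and publishing into ROS are deliberately left out.

```python
def parse_line(line):
    """Parse a hypothetical 'US,<left>,<centre>,<right>' line into a tuple of ints.

    Returns None for anything malformed, so a corrupted serial line is
    dropped rather than acted on.
    """
    parts = line.strip().split(",")
    if len(parts) != 4 or parts[0] != "US":
        return None
    try:
        return tuple(int(p) for p in parts[1:])
    except ValueError:
        return None

print(parse_line("US,23,118,45\r\n"))  # (23, 118, 45)
print(parse_line("US,23,1"))           # None
```

Rejecting bad lines outright seems the safer default for a collision backstop, since serial links do occasionally deliver half a line at startup.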